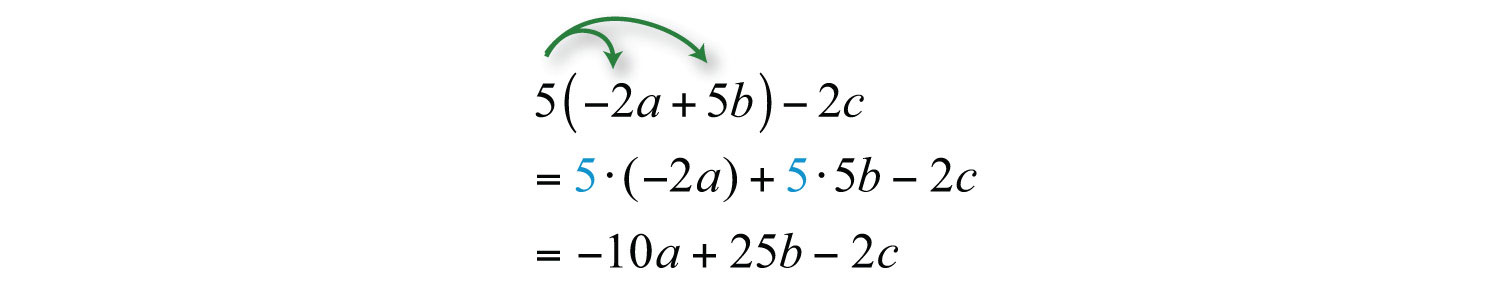


The optimizer is enabled by default. In rare cases, you might see a change in exception behavior: either an exception that is not raised at all, or one that is raised earlier than expected. One optimization that the compiler can perform is subprogram inlining.

Subprogram inlining replaces a subprogram call to a subprogram in the same program unit with a copy of the called subprogram. If a particular subprogram is inlined, performance almost always improves. However, because the compiler inlines subprograms early in the optimization process, it is possible for subprogram inlining to preclude later, more powerful optimizations. To turn off inlining for a subprogram, use the INLINE pragma, described in INLINE Pragma.
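As a minimal sketch of how the INLINE pragma can be applied, the block below requests inlining for one call and turns it off for another. The function `add_tax` and its arithmetic are hypothetical; the pragma affects only the statement that immediately follows it.

```sql
DECLARE
  total NUMBER := 0;

  -- Hypothetical helper, small enough to be a reasonable inlining candidate.
  FUNCTION add_tax (amount NUMBER) RETURN NUMBER IS
  BEGIN
    RETURN amount * 1.08;
  END add_tax;
BEGIN
  -- Request inlining for calls to add_tax in the next statement.
  PRAGMA INLINE (add_tax, 'YES');
  total := add_tax(100);

  -- Turn inlining off for calls to add_tax in the next statement.
  PRAGMA INLINE (add_tax, 'NO');
  total := total + add_tax(50);

  DBMS_OUTPUT.PUT_LINE(total);
END;
/
```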

The information in this chapter is especially valuable if you are responsible for the following kinds of programs.

Programs that do a lot of mathematical calculations. You will want to look at all performance features to make the function as efficient as possible, and perhaps a function-based index to precompute the results for each row and save on query time.

Programs that spend a lot of time processing INSERT, UPDATE, or DELETE statements, or looping through query results. You will want to investigate the FORALL statement for issuing DML, and the BULK COLLECT INTO and RETURNING BULK COLLECT INTO clauses for queries. With the many performance improvements in Oracle Database 10g, any code from earlier releases is a candidate for tuning. You will want to investigate native compilation. Before starting any tuning effort, benchmark the current system and measure how long particular subprograms take.

Slow SQL statements are the main reason for slow execution. Make sure you have appropriate indexes. There are different kinds of indexes for different situations. Your index strategy might be different depending on the sizes of various tables in a query, the distribution of data in each query, and the columns used in the WHERE clauses.

Rewrite the SQL statements if necessary. For example, query hints can avoid problems such as unnecessary full-table scans. For more information about these methods, see Oracle Database Performance Tuning Guide. If you are looping through the result set of a query, look at the BULK COLLECT clause of the SELECT INTO statement as a way to bring the entire result set into memory in a single operation. Badly written subprograms (for example, a slow sort or search function) can harm performance.

Avoid unnecessary calls to subprograms, and optimize their code. If a function is called within a SQL query, you can cache the function value for each row by creating a function-based index on the table in the query. The CREATE INDEX statement might take a while, but queries can be much faster. If a column is passed to a function within a SQL query, the query cannot use regular indexes on that column, and the function might be called for every row in a potentially very large table.
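A function-based index of the kind described above might look like the following sketch. It assumes the `employees` table of Oracle's HR sample schema; the index name is hypothetical.

```sql
-- Precompute UPPER(last_name) for every row so the query below can use an index
-- instead of calling the function once per row.
CREATE INDEX emp_upper_last_name_ix ON employees (UPPER(last_name));

SELECT employee_id, last_name
FROM   employees
WHERE  UPPER(last_name) = 'KING';
```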

Consider nesting the query so that the inner query filters the results to a small number of rows, and the outer query calls the function only a few times.

If your program does not depend on OUT parameters keeping their values when a subprogram ends with an unhandled exception, you can add the NOCOPY keyword to the parameter declarations, so the parameters are declared OUT NOCOPY or IN OUT NOCOPY.

This technique can give significant speedup if you are passing back large amounts of data in OUT parameters, such as collections, big VARCHAR2 values, or LOBs. This technique also applies to member methods of object types.

If these methods modify attributes of the object type, all the attributes are copied when the method ends. For information about design considerations for object methods, see Oracle Database Object-Relational Developer's Guide. To issue a series of DML statements, replace loop constructs with FORALL statements.

To loop through a result set and store the values, use the BULK COLLECT clause on the query to bring the query results into memory in one operation.

If you must loop through a result set more than once, or issue other queries as you loop through a result set, you can probably enhance the original query to give you exactly the results you want. Some query operators to explore include UNION, INTERSECT, MINUS, and CONNECT BY.

You can also nest one query inside another (known as a subselect) to do the filtering and sorting in multiple stages. Regular expression features use special characters for pattern matching. For multilanguage programming, there are also extensions such as POSIX character classes, written in the form '[:class:]'.

PL/SQL stops evaluating a logical expression as soon as the result can be determined. This functionality is known as short-circuit evaluation. When evaluating multiple conditions separated by AND or OR, put the least expensive ones first.

Whenever possible, choose data types carefully to minimize implicit conversions. Use literals of the appropriate types, such as character literals in character expressions and decimal numbers in number expressions.
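To illustrate the advice on ordering conditions, here is a small sketch of short-circuit evaluation. The function `expensive_check` is a hypothetical stand-in for a costly test; because the cheap comparison comes first and is false, the function is not called.

```sql
DECLARE
  n NUMBER := 0;

  -- Hypothetical stand-in for an expensive check (for example, one that runs a query).
  FUNCTION expensive_check RETURN BOOLEAN IS
  BEGIN
    DBMS_OUTPUT.PUT_LINE('expensive_check was called');
    RETURN TRUE;
  END expensive_check;
BEGIN
  -- n > 0 is FALSE, so evaluation of the AND stops there
  -- and expensive_check is never called.
  IF n > 0 AND expensive_check THEN
    DBMS_OUTPUT.PUT_LINE('both conditions hold');
  END IF;
END;
/
```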

Minimizing conversions might mean changing the types of your variables, or even working backward and designing your tables with different data types. The data type NUMBER and its subtypes are represented in a special internal format, designed for portability and arbitrary scale and precision, not performance.

Even the subtype INTEGER is treated as a floating-point number with nothing after the decimal point. Operations on NUMBER or INTEGER variables require calls to library routines. Avoid constrained subtypes such as INTEGER, NATURAL, NATURALN, POSITIVE, POSITIVEN, and SIGNTYPE in performance-critical code. Variables of these types require extra checking at run time, each time they are used in a calculation.

The floating-point types BINARY_FLOAT and BINARY_DOUBLE also require less space in the database. Their subtypes SIMPLE_FLOAT and SIMPLE_DOUBLE each have the same range as the base type and have a NOT NULL constraint. These types do not always represent fractional values precisely, and handle rounding differently than the NUMBER types. These types are less suitable for financial code where accuracy is critical.

You might need to allocate large VARCHAR2 variables when you are not sure how big an expression result will be.

You can conserve memory by declaring VARCHAR2 variables with large sizes, rather than estimating just a little on the high side. When you call a packaged subprogram for the first time, the whole package is loaded into the shared memory pool.

If the package ages out of memory, and you reference it again, it is reloaded. You can improve performance by sizing the shared memory pool correctly. Make it large enough to hold all frequently used packages, but not so large that memory is wasted. When a package is pinned, it does not age out; it remains in memory no matter how full the pool gets or how frequently you access the package.

If you receive a compiler warning about inefficient code, and the performance of this code is important, follow the suggestions in the warning and make the code more efficient. For more information, see Oracle Database Advanced Application Developer's Guide. The PL/SQL hierarchical profiler generates reports in HTML and provides the option of storing results in relational format in database tables for custom report generation (such as third-party tools offer).

Determine why your program spent more time accessing certain data structures and executing certain code segments. Use the results of your analysis to replace inappropriate data structures and rework slow algorithms. For example, due to an exponential growth in data, you might need to replace a linear search with a binary search. Tracing all subprograms and exceptions in a large program can produce huge amounts of data that are difficult to manage.

After you have collected data with Trace, you can query the database tables that contain the performance data and analyze it in the same way that you analyze the performance data from Profiler (see Using the Profiler API).

The FORALL statement sends INSERT, UPDATE, or DELETE statements in batches, rather than one at a time. The BULK COLLECT clause brings back batches of results from SQL. If the DML statement affects four or more database rows, bulk SQL can improve performance considerably. This process is called bulk binding. If the collection has n elements, bulk binding uses a single operation to perform the equivalent of n SELECT INTO, INSERT, UPDATE, or DELETE statements. A query that uses bulk binding can return any number of rows, without requiring a FETCH statement for each one.

To speed up SELECT INTO statements, include the BULK COLLECT clause. The keyword FORALL lets you run multiple DML statements very efficiently. It can only repeat a single DML statement, unlike a general-purpose FOR loop. For full syntax and restrictions, see FORALL Statement.

The SQL statement can reference more than one collection, but FORALL only improves performance where the index value is used as a subscript. Usually, the bounds specify a range of consecutive index numbers. If the index numbers are not consecutive, such as after you delete collection elements, you can use the INDICES OF or VALUES OF clause to iterate over just those index values that really exist.
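A basic FORALL statement over a range of consecutive index values could be sketched as follows. The scratch table `emp_temp` is hypothetical and is assumed to be copied from the `employees` table of the HR sample schema.

```sql
-- Hypothetical scratch table for the sketch.
CREATE TABLE emp_temp AS SELECT * FROM employees;

DECLARE
  TYPE NumList IS VARRAY(3) OF NUMBER;
  depts NumList := NumList(10, 30, 70);
BEGIN
  -- The DELETE runs once per element, but all executions are sent
  -- to the SQL engine together; depts(i) uses the index as a subscript.
  FORALL i IN depts.FIRST .. depts.LAST
    DELETE FROM emp_temp WHERE department_id = depts(i);

  -- After FORALL, SQL%ROWCOUNT holds the total for all executions.
  DBMS_OUTPUT.PUT_LINE(SQL%ROWCOUNT || ' rows deleted in total');
END;
/
```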

The INDICES OF clause iterates over all of the index values in the specified collection, or only those between a lower and upper bound. The VALUES OF clause refers to a collection whose elements are themselves index values; the FORALL statement iterates over the index values specified by the elements of this collection.

A FORALL statement can, for example, send three DELETE statements to the SQL engine at once. When the same collection elements are inserted into a database table twice, first with a FOR loop and then with a FORALL statement, the FORALL version is much faster. The bounds of the FORALL loop can apply to part of a collection, not necessarily all the elements. You might need to delete some elements from a collection before using the collection in a FORALL statement.

The INDICES OF clause processes sparse collections by iterating through only the remaining elements. You might also want to leave the original collection alone, but process only some elements, process the elements in a different order, or process some elements more than once.
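The two clauses can be sketched together as below. The table `name_log`, the collection contents, and the `picks` collection are all hypothetical; `picks` holds subscripts into `names`, so VALUES OF processes only those elements, in that order.

```sql
CREATE TABLE name_log (name VARCHAR2(30));  -- hypothetical target table

DECLARE
  TYPE NameTab  IS TABLE OF VARCHAR2(30);
  TYPE IndexTab IS TABLE OF PLS_INTEGER;
  names NameTab  := NameTab('alpha', 'beta', 'gamma', 'delta');
  picks IndexTab := IndexTab(4, 1);  -- subscripts of names to process
BEGIN
  names.DELETE(2);  -- names is now sparse: subscripts 1, 3, 4 remain

  -- A plain 1 .. names.LAST range would fail on the missing element 2;
  -- INDICES OF visits only the subscripts that exist.
  FORALL i IN INDICES OF names
    INSERT INTO name_log (name) VALUES (names(i));

  -- VALUES OF takes its index values from the elements of picks.
  FORALL i IN VALUES OF picks
    INSERT INTO name_log (name) VALUES (names(i));
END;
/
```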

Instead of copying the entire elements into new collections, which might use up substantial amounts of memory, the VALUES OF clause lets you set up simple collections whose elements serve as pointers to elements in the original collection. Consider a program that creates a collection holding some arbitrary data, a set of table names. Deleting some of the elements makes it a sparse collection that does not work in a default FORALL statement. The program uses a FORALL statement with the INDICES OF clause to insert the data into a table.

It then sets up two more collections, pointing to certain elements from the original collection. The program stores each set of names in a different database table using FORALL statements with the VALUES OF clause.

In a FORALL statement, if any execution of the SQL statement raises an unhandled exception, all database changes made during previous executions are rolled back. However, if a raised exception is caught and handled, changes are rolled back to an implicit savepoint marked before each execution of the SQL statement.

Changes made during previous executions are not rolled back. For example, suppose you create a database table that stores department numbers and job titles. Then, you change the job titles so that they are longer. The second UPDATE fails because the new value is too long for the column. Because you handle the exception, the first UPDATE is not rolled back and you can commit that change. For additional description of cursor attributes, see SQL Cursors (Implicit).

The SQL%BULK_ROWCOUNT attribute works like an associative array, using the same subscripts as the FORALL statement. For example, a FORALL statement can insert an arbitrary number of rows in each iteration, and the attribute records the count for each one. The SAVE EXCEPTIONS clause enables a bulk-bind operation to save information about exceptions and continue processing.

Provide an exception handler to track the exceptions that occurred during the bulk operation. With this approach you can perform a number of DML operations, without stopping if some operations encounter errors.
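A bulk operation that continues despite errors might be sketched like this. The table `short_names` and the sample data are hypothetical. ORA-24381 is the error Oracle documents for a FORALL ... SAVE EXCEPTIONS statement in which at least one execution failed, and `ERROR_INDEX` and `ERROR_CODE` are the documented fields of each `SQL%BULK_EXCEPTIONS` record.

```sql
CREATE TABLE short_names (name VARCHAR2(5));  -- hypothetical table with a narrow column

DECLARE
  TYPE NameTab IS TABLE OF VARCHAR2(30);
  names      NameTab := NameTab('ann', 'bartholomew', 'cy', 'maximilian');
  dml_errors EXCEPTION;
  -- Associate the exception name with ORA-24381.
  PRAGMA EXCEPTION_INIT(dml_errors, -24381);
BEGIN
  -- Values longer than 5 characters fail, but the other inserts still run.
  FORALL i IN 1 .. names.COUNT SAVE EXCEPTIONS
    INSERT INTO short_names (name) VALUES (names(i));
EXCEPTION
  WHEN dml_errors THEN
    FOR j IN 1 .. SQL%BULK_EXCEPTIONS.COUNT LOOP
      DBMS_OUTPUT.PUT_LINE(
        'Iteration ' || SQL%BULK_EXCEPTIONS(j).ERROR_INDEX ||
        ' failed: ' || SQLERRM(-SQL%BULK_EXCEPTIONS(j).ERROR_CODE));
    END LOOP;
END;
/
```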

An ORA error is raised if any exceptions are caught and saved after a bulk operation. The saved exceptions are held in a collection of records. Each record has two fields: the FORALL iteration during which the exception was raised, and the corresponding error code. Its subscripts range from 1 to COUNT. You might need to work backward to determine which collection element was used in the iteration that caused an exception.

Using the BULK COLLECT clause with a query is a very efficient way to retrieve the result set.

Instead of looping through each row, you store the results in one or more collections, in a single operation.

You can use the BULK COLLECT clause in the SELECT INTO and FETCH INTO statements, and in the RETURNING INTO clause. With the BULK COLLECT clause, all the variables in the INTO list must be collections. The table columns can hold scalar or composite values, including object types.

The collections are initialized automatically. Nested tables and associative arrays are extended to hold as many elements as needed.

If you use varrays, all the return values must fit in the varray's declared size. Elements are inserted starting at index 1, overwriting any existing elements. You must check whether the resulting nested table or varray is null, or if the resulting associative array has no elements. To prevent the resulting collections from expanding without limit, you can use the LIMIT clause or the pseudocolumn ROWNUM to limit the number of rows processed. You can also use the SAMPLE clause to retrieve a random sample of rows.
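The following sketch pulls two columns into nested tables with BULK COLLECT, caps the row count with ROWNUM, and checks for an empty result before looping. It assumes the `employees` table of the HR sample schema; the cap of 50 is arbitrary.

```sql
DECLARE
  TYPE NumTab  IS TABLE OF employees.employee_id%TYPE;
  TYPE NameTab IS TABLE OF employees.last_name%TYPE;
  enums NumTab;   -- no explicit initialization; BULK COLLECT handles it
  names NameTab;
BEGIN
  SELECT employee_id, last_name
  BULK COLLECT INTO enums, names
  FROM employees
  WHERE ROWNUM <= 50;

  -- Guard against an empty result before using FIRST and LAST.
  IF enums IS NULL OR enums.COUNT = 0 THEN
    DBMS_OUTPUT.PUT_LINE('No rows');
  ELSE
    FOR i IN enums.FIRST .. enums.LAST LOOP
      DBMS_OUTPUT.PUT_LINE(enums(i) || ': ' || names(i));
    END LOOP;
  END IF;
END;
/
```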

You can process very large result sets by fetching a specified number of rows at a time from a cursor. You can fetch from a cursor into one or more collections, or into a collection of records.

The optional LIMIT clause, allowed only in bulk FETCH statements, limits the number of rows fetched from the database.

For example, with each iteration of a loop, a FETCH statement with a limit of ten fetches ten rows (or fewer) into the index-by table empids.
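A LIMIT loop of this shape could be sketched as follows, assuming the HR sample schema's `employees` table. The cursor name `c1` and the department filter are illustrative.

```sql
DECLARE
  TYPE NumTab IS TABLE OF employees.employee_id%TYPE INDEX BY PLS_INTEGER;
  CURSOR c1 IS
    SELECT employee_id FROM employees WHERE department_id = 80;
  empids NumTab;
BEGIN
  OPEN c1;
  LOOP
    FETCH c1 BULK COLLECT INTO empids LIMIT 10;  -- at most 10 rows per fetch
    EXIT WHEN empids.COUNT = 0;                  -- nothing left to process
    FOR i IN 1 .. empids.COUNT LOOP
      DBMS_OUTPUT.PUT_LINE('Employee ' || empids(i));
    END LOOP;
  END LOOP;
  CLOSE c1;
END;
/
```

Testing `empids.COUNT` rather than `c1%NOTFOUND` avoids skipping a final, partially filled batch.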

The previous values are overwritten. Note the use of empids.COUNT to determine when to exit the loop. You can use the BULK COLLECT clause in the RETURNING INTO clause of an INSERT, UPDATE, or DELETE statement.

You can combine the BULK COLLECT clause with a FORALL statement. The output collections are built up as the FORALL statement iterates.

For example, if the collection depts has 3 elements, the FORALL statement iterates 3 times. The column values returned by each execution are added to the values returned previously.
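Combining FORALL with RETURNING BULK COLLECT INTO might look like the sketch below. The scratch table `emp_temp2` is hypothetical and is assumed to be copied from the HR sample schema's `employees` table.

```sql
CREATE TABLE emp_temp2 AS SELECT * FROM employees;  -- hypothetical scratch copy

DECLARE
  TYPE NumList IS TABLE OF NUMBER;
  TYPE EnumTab IS TABLE OF employees.employee_id%TYPE;
  depts NumList := NumList(10, 20, 30);
  e_ids EnumTab;
BEGIN
  -- Each of the 3 DELETE executions appends its returned IDs to e_ids.
  FORALL j IN depts.FIRST .. depts.LAST
    DELETE FROM emp_temp2
    WHERE department_id = depts(j)
    RETURNING employee_id BULK COLLECT INTO e_ids;

  DBMS_OUTPUT.PUT_LINE('Deleted ' || e_ids.COUNT || ' employees');
END;
/
```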

If you use a FOR loop instead of the FORALL statement, the set of returned values is overwritten by each DELETE statement. You cannot use the SELECT BULK COLLECT statement in a FORALL statement.

Using host arrays for bulk binds is the most efficient way to pass collections to and from the database server. For example, an input host array can be used in a DELETE statement.

In a SELECT BULK COLLECT INTO statement, as with subprogram parameters that are passed by reference, aliasing can cause unexpected results.

In one such case, the intention is to select specific values from a collection, numbers1, and then store them in the same collection. The unexpected result is that all elements of numbers1 are deleted. One workaround uses a cursor to achieve the intended result. Another selects specific values from numbers1 and then stores them in a different collection, numbers2.

The second workaround performs faster than the first.

The BINARY_FLOAT and BINARY_DOUBLE data types act much like the native floating-point types on many hardware systems, with semantics derived from the IEEE floating-point standard.

The way these data types represent decimal data makes them less suitable for financial applications, where precise representation of fractional amounts is more important than pure performance. Within a package, you can write overloaded versions of subprograms that accept different numeric parameters.

Some programs (a general-purpose report writer, for example) must build and process a variety of SQL statements, where the exact text of the statement is unknown until run time.

Such statements probably change from execution to execution. They are called dynamic SQL statements. A cursor variable can be declared and then associated with a dynamic SELECT statement.

By default, OUT and IN OUT parameters are passed by value.
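For the dynamic SQL case described above, a cursor variable opened for a run-time SELECT statement might be sketched as follows. It assumes the HR sample schema's `employees` table; the variable names and the salary threshold are illustrative.

```sql
DECLARE
  TYPE EmpCurTyp IS REF CURSOR;
  emp_cv EmpCurTyp;
  v_name employees.last_name%TYPE;
  v_sal  employees.salary%TYPE := 10000;
BEGIN
  -- The statement text is only known at run time; :s is a bind placeholder
  -- filled from v_sal by the USING clause.
  OPEN emp_cv FOR
    'SELECT last_name FROM employees WHERE salary > :s'
    USING v_sal;

  LOOP
    FETCH emp_cv INTO v_name;
    EXIT WHEN emp_cv%NOTFOUND;
    DBMS_OUTPUT.PUT_LINE(v_name);
  END LOOP;
  CLOSE emp_cv;
END;
/
```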

The values of any IN OUT parameters are copied before the subprogram is executed. During subprogram execution, temporary variables hold the output parameter values.

If the subprogram exits normally, these values are copied to the actual parameters. If the subprogram exits with an unhandled exception, the original parameters are unchanged. When the parameters represent large data structures such as collections, records, and instances of object types, this copying slows down execution and uses up memory.

In particular, this overhead applies to each call to an object method. With NOCOPY, if the subprogram exits normally, the behavior is the same as normal. If the subprogram exits early with an exception, the values of OUT and IN OUT parameters (or object attributes) might still change.

To use this technique, ensure that the subprogram handles all exceptions. As a test, you can load many thousands of records into a local nested table, which is passed to two local procedures that do nothing.

A call to the subprogram that uses NOCOPY takes much less time. The use of NOCOPY increases the likelihood of parameter aliasing. Remember, NOCOPY is a hint, not a directive. The compiler ignores the hint in cases such as these:

The actual parameter is an element of an associative array. This restriction does not apply if the parameter is an entire associative array.

The actual parameter is constrained, such as by scale or NOT NULL. This restriction does not apply to size-constrained character strings. This restriction does not extend to constrained elements or attributes of composite types.

The actual and formal parameters are records, the actual parameter was declared implicitly as the index of a cursor FOR loop, and constraints on corresponding fields in the records differ.
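The NOCOPY timing comparison described above can be sketched as follows. It assumes the HR sample schema's `employees` table with a row for employee 100; the procedure names and the element count are arbitrary, and the measured times depend on the system.

```sql
DECLARE
  TYPE EmpTabTyp IS TABLE OF employees%ROWTYPE;
  emp_tab EmpTabTyp := EmpTabTyp(NULL);  -- start with one empty element
  t1 NUMBER;
  t2 NUMBER;
  t3 NUMBER;

  PROCEDURE do_nothing1 (tab IN OUT EmpTabTyp) IS
  BEGIN NULL; END;

  PROCEDURE do_nothing2 (tab IN OUT NOCOPY EmpTabTyp) IS
  BEGIN NULL; END;
BEGIN
  SELECT * INTO emp_tab(1) FROM employees WHERE employee_id = 100;
  emp_tab.EXTEND(9999, 1);   -- append 9,999 copies of element 1

  t1 := DBMS_UTILITY.GET_TIME;
  do_nothing1(emp_tab);      -- passed by value: the collection is copied
  t2 := DBMS_UTILITY.GET_TIME;
  do_nothing2(emp_tab);      -- passed by reference, if the hint is honored
  t3 := DBMS_UTILITY.GET_TIME;

  -- GET_TIME counts hundredths of a second.
  DBMS_OUTPUT.PUT_LINE('IN OUT:        ' || (t2 - t1) / 100 || ' s');
  DBMS_OUTPUT.PUT_LINE('IN OUT NOCOPY: ' || (t3 - t2) / 100 || ' s');
END;
/
```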

Natively compiled program units work in all server environments, including shared server configuration (formerly called "multithreaded server") and Oracle Real Application Clusters (Oracle RAC). On some platforms, the DBA might want to do some optional configuration. See Oracle Database Administrator's Guide for information about configuring a database.

While you are debugging program units and recompiling them frequently, interpreted mode has advantages over native mode.

Native compilation provides the greatest gains for computation-intensive procedural operations. Examples are data warehouse applications and applications with extensive server-side transformations of data for display. When a very large number of program units (typically many thousands) are compiled for native execution and are simultaneously active, the large amount of shared memory required might affect system performance. The native code need not be interpreted at run time, so it runs faster. The compiled code and the interpreted code make the same library calls, so their behavior is exactly the same.

Regardless of how many sessions call the program unit, shared memory has only one copy of it. If a program unit is not being used, the shared memory it is using might be freed, to reduce memory load. PL/SQL native compilation works transparently in an Oracle Real Application Clusters (Oracle RAC) environment.

The next time the same subprogram is called, the database recompiles the subprogram automatically. During the conversion to native compilation, TYPE specifications are not recompiled by dbmsupgnv.

Package specifications seldom contain executable code, so the run-time benefits of compiling to NATIVE are not measurable. You can use the TRUE command-line parameter with the dbmsupgnv script to exclude package specifications. When converting to interpreted compilation, the dbmsupgin.

Shut down all of the Application services including the Forms Processes, Web Servers, Reports Servers, and Concurrent Manager Servers. After shutting down all of the Application services, ensure that all of the connections to the database were terminated. Shut down the database in normal or immediate mode as the user SYS.

See the Oracle Database Administrator's Guide. If the database is using a server parameter file, then set this after the database has started. However, it does affect all subsequently compiled units, so explicitly set it to the compilation type that you want.


Start up the database in upgrade mode, using the UPGRADE option. You can save the output of the query for future reference with the SQL SPOOL statement. This process also invalidates the units. Use TRUE with the script to exclude package specifications; FALSE to include the package specifications. This update must be done when the database is in UPGRADE mode. The script is guaranteed to complete successfully or roll back all the changes.

Before you run the utlrp. You can ensure this with the following statement:. See the comments in the script for information about setting the degree explicitly. If for any reason the script is abnormally terminated, rerun the utlrp. Reexecute the query in step 6. If recompiling with dbmsupgnv.

If recompiling with dbmsupgin. Disable the restricted session mode for the database, then start the services that you previously shut down.

To disable restricted session mode, use the ALTER SYSTEM DISABLE RESTRICTED SESSION statement.

This section explains how to chain together special kinds of functions known as pipelined table functions. These functions are used in situations such as data warehousing to apply multiple transformations to data. You invoke the table function as the operand of the table operator in the FROM list of a SQL SELECT statement. It is also possible to invoke a table function as a SELECT list item; here, you do not use the table operator.

A table function can take a collection of rows as input. Execution of a table function can be parallelized, and returned rows can be streamed directly to the next process without intermediate staging. Rows from a collection returned by a table function can also be pipelined, that is, iteratively returned as they are produced, instead of in a batch after all processing of the table function's input is completed.

Pipelining improves query response time. With non-pipelined table functions, the entire collection returned by a table function must be constructed and returned to the server before the query can return a single result row. Pipelining enables rows to be returned iteratively, as they are produced. This also reduces the memory that a table function requires, as the object cache need not materialize the entire collection.

Pipelining works by iteratively providing result rows from the collection returned by a table function as the rows are produced, instead of waiting until the entire collection is staged in tables or memory and then returning the entire collection. You declare a pipelined table function by specifying the PIPELINED keyword.

Pipelined functions can be defined at the schema level with CREATE FUNCTION or in a package. The PIPELINED keyword indicates that the function returns rows iteratively.

The return type of the pipelined table function must be a supported collection type, such as a nested table or a varray. This collection type can be declared at the schema level or inside a package. Inside the function, you return individual elements of the collection type. The elements of the collection type must be supported SQL data types, such as NUMBER and VARCHAR2. A pipelined table function can accept any argument that regular functions accept.
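A minimal pipelined table function declared in a package might be sketched as follows. The package name `pkg1`, the type `numset_t`, and the function `f1` are hypothetical.

```sql
CREATE OR REPLACE PACKAGE pkg1 AS
  TYPE numset_t IS TABLE OF NUMBER;
  FUNCTION f1 (x NUMBER) RETURN numset_t PIPELINED;
END pkg1;
/
CREATE OR REPLACE PACKAGE BODY pkg1 AS
  FUNCTION f1 (x NUMBER) RETURN numset_t PIPELINED IS
  BEGIN
    FOR i IN 1 .. x LOOP
      PIPE ROW (i);   -- hand one element back to the caller immediately
    END LOOP;
    RETURN;           -- the RETURN of a pipelined function carries no value
  END f1;
END pkg1;
/
-- Query the function through the TABLE operator.
SELECT * FROM TABLE(pkg1.f1(5));
```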

A table function that accepts a REF CURSOR as an argument can serve as a transformation function. That is, it can use the REF CURSOR to fetch the input rows, perform some transformation on them, and then pipeline the results out. Such a function might, for example, produce two output rows (collection elements) for each input row. When a CURSOR subquery is passed from SQL to a REF CURSOR function argument, the referenced cursor is already open when the function begins executing.

The PIPE ROW statement may be used only in the body of pipelined table functions; an exception is raised if it is used anywhere else. The PIPE ROW statement can be omitted for a pipelined table function that returns no rows.

A pipelined table function may have a RETURN statement that does not return a value. If a table function is part of an autonomous transaction, it must COMMIT or ROLLBACK before each PIPE ROW statement, to avoid an error in the calling subprogram.

The database has three special SQL data types that enable you to dynamically encapsulate and access type descriptions, data instances, and sets of data instances of any other SQL type, including object and collection types. You can also use these three special types to create unnamed types, including anonymous collection types. The types are SYS.ANYTYPE, SYS.ANYDATA, and SYS.ANYDATASET. The SYS.ANYDATA type can be useful in some situations as a return value from table functions.

Table functions can be chained so that a single statement pipelines results from one function, g, to another function, f.
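Pipelining from g to f can be sketched with two small functions. The type `num_tab_t` and the bodies of `f` and `g` are hypothetical; only the shape of the final query follows the description above.

```sql
CREATE OR REPLACE TYPE num_tab_t AS TABLE OF NUMBER;
/
-- g: produces the numbers 1 .. 3.
CREATE OR REPLACE FUNCTION g RETURN num_tab_t PIPELINED IS
BEGIN
  FOR i IN 1 .. 3 LOOP
    PIPE ROW (i);
  END LOOP;
  RETURN;
END g;
/
-- f: reads rows from a cursor and pipes out each value doubled.
CREATE OR REPLACE FUNCTION f (p SYS_REFCURSOR) RETURN num_tab_t PIPELINED IS
  v NUMBER;
BEGIN
  LOOP
    FETCH p INTO v;
    EXIT WHEN p%NOTFOUND;
    PIPE ROW (v * 2);
  END LOOP;
  CLOSE p;
  RETURN;
END f;
/
-- The CURSOR expression passes the rows produced by g to f as a REF CURSOR.
SELECT * FROM TABLE(f(CURSOR(SELECT * FROM TABLE(g()))));
```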

Parallel execution works similarly except that each function executes in a different process or set of processes. Multiple calls to a pipelined table function, either within the same query or in separate queries result in multiple executions of the underlying implementation. By default, there is no buffering or reuse of rows.

If a function is declared DETERMINISTIC but is not really deterministic, results are unpredictable. Cursors over table functions have the same fetch semantics as ordinary cursors. REF CURSOR assignments based on table functions do not have any special semantics.

This is so even ignoring the overhead associated with executing two SQL statements and assuming that the results can be pipelined between the two statements. In the preceding example, the CURSOR keyword causes the results of a subquery to be passed as a REF CURSOR parameter. Also, if you are using a strong REF CURSOR type as an argument to a table function, then the actual type of the REF CURSOR argument must match the column type, or an error is generated. Weak REF CURSOR arguments to table functions can only be partitioned using the PARTITION BY ANY clause.

You cannot use range or hash partitioning for weak REF CURSOR arguments. For more information about cursor variables, see Declaring REF CURSOR Types and Cursor Variables.

You can pass table function return values to other table functions by creating a REF CURSOR that iterates over the returned data. You can also explicitly open a REF CURSOR for a query and pass it as a parameter to a table function.

In this case, the table function closes the cursor when it completes, so your program must not explicitly try to close the cursor. A table function can compute aggregate results using the input ref cursor, for example a weighted average computed by iterating over a set of input rows.

Example Using a Pipelined Table Function as an Aggregate Function.

During parallel execution, each instance of the table function creates an independent transaction. Pipelined table functions cannot be the target table in UPDATE, INSERT, or DELETE statements; such statements raise an exception. However, you can create a view over a table function and use INSTEAD OF triggers to update it. For example, an INSTEAD OF trigger can fire when the user inserts a row into a view named BookTable. INSTEAD OF triggers can be defined for all DML operations on a view built on a table function.

Exception handling in pipelined table functions works just as it does with regular functions. Some languages, such as C and Java, provide a mechanism for user-supplied exception handling. If an exception raised within a table function is handled, the table function executes the exception handler and continues processing. Exiting the exception handler takes control to the enclosing scope. If the exception is cleared, execution proceeds normally.

If SQL statements are slowing down your program, analyze the execution plans and performance of the SQL statements using the EXPLAIN PLAN statement and the SQL Trace facility with the TKPROF utility. Rewrite the SQL statements if necessary.


The Profiler API saves run-time statistics in database tables, which you can query. You can use Profiler only on units for which you have CREATE privilege. With the Trace API, you can specify the subprograms to trace and the tracing level. The PL/SQL hierarchical profiler requires no special source or compile-time preparation. To use the Trace API, optionally limit tracing to specific subprograms and choose a tracing level, start the tracing session, and then stop the tracing session.

Parallel DML is disabled with bulk binds.

The comparison of INSERT statements issued in a loop uses two tables, created with CREATE TABLE parts1 (pnum INTEGER, pname VARCHAR2(15)) and CREATE TABLE parts2 (pnum INTEGER, pname VARCHAR2(15)). With a sparse collection, FORALL i IN INDICES OF iterates through the actual subscripts. A BULK COLLECT query can load two entire database columns into nested tables. The aliasing problem described earlier arises in statements of the form SELECT column BULK COLLECT INTO collection FROM table.


Compiling for interpreted mode is faster than compiling for native mode, which matters while you are debugging and recompiling frequently. For platform-specific configuration, see the documentation for your platform.

Natively compiled subprograms and interpreted subprograms can call each other. The procedure described earlier covers the conversion to native compilation. When converting to interpreted compilation instead, skip the first step. A pipelined table function cannot be run over a database link.

The reason is that the return type of a pipelined table function is a SQL user-defined type, which can be used only within a single database as explained in Oracle Database Object-Relational Developer's Guide. When rows from a collection returned by a table function are pipelined, the pipelined function always references the current state of the data.

After opening the cursor on the collection, if the data in the collection is changed, then the change is reflected in the cursor.


An unhandled exception in a table function causes the parent transaction to roll back.

The Trace API traces the order in which subprograms execute.